BUG

Human and AI interaction in built environment

MY ROLE

Creator

TAGS

Unreal Engine, AI system, VR, Game design, Gesture detection, Immersive experience, Motion design, Level design

DESCRIPTION

"BUG" is a VR experience built in Unreal Engine 5 that explores immersive interaction by blending real-world actions with VR controller input and body gestures. The idea emerged from reflecting on the evolving relationship between humans and artificial intelligence.

PROJECT OBJECTIVES

> Engage with the digital and physical worlds in a seamless and captivating manner.

> Enable players to interact seamlessly with the environment and engage with an AI.

DIGITAL ENVIRONMENT

"BUG" mirrors the profound link between human communication and technology in virtual reality. Blending body gestures with responsive robotics sparks a captivating dialogue between human and machine.

LOCOMOTION, DYNAMIC CAMERA

Dynamic camera movement introduces subtle tilts and shifts in the player's field of view, mimicking the organic motion one feels while walking.
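One common way to produce this kind of motion is a sinusoidal "camera bob" driven by the distance walked. The sketch below is only an illustration of that idea, not the project's actual Unreal Engine setup: the function name `camera_bob` and the parameters `stride_length`, `bob_height`, and `max_roll_deg` are hypothetical, and the default values are arbitrary.

```python
import math
from typing import Tuple


def camera_bob(
    distance_walked: float,
    stride_length: float = 1.5,   # assumed distance covered by one full stride (two steps)
    bob_height: float = 0.02,     # assumed peak vertical shift of the camera
    max_roll_deg: float = 0.5,    # assumed peak sideways tilt in degrees
) -> Tuple[float, float]:
    """Return (vertical_offset, roll_degrees) for a given walked distance."""
    # Phase advances by 2*pi over one full stride.
    phase = 2.0 * math.pi * distance_walked / stride_length
    # The camera rises and falls once per step, so twice per stride.
    vertical_offset = bob_height * math.sin(2.0 * phase)
    # The camera sways to one side and back once per stride.
    roll_degrees = max_roll_deg * math.sin(phase)
    return vertical_offset, roll_degrees
```

Driving the phase from distance rather than time means the motion stops when the player stops, and keeping both amplitudes small keeps the effect subtle.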

GESTURE DETECTION FLOW

1. Centroid Calculation: The centroid (ci) is determined by averaging the coordinates of the gesture's data points.

2. Point Relocation: All points are adjusted to place the centroid at the origin, ensuring accurate recognition.

3. Scaling: The gesture is scaled within the range of -1 to 1, further enhancing recognition accuracy.
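The three steps above can be sketched as a single normalization function. This is a minimal illustration under stated assumptions rather than the project's implementation: the name `normalize_gesture` is hypothetical, gesture points are assumed to be 3D tuples, and scaling is assumed to be uniform (dividing by the largest absolute coordinate) so that the gesture keeps its proportions.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]


def normalize_gesture(points: List[Point]) -> List[Point]:
    """Center a gesture on the origin and scale it into the range -1 to 1."""
    if not points:
        return []

    # 1. Centroid calculation: average the coordinates of all data points.
    n = len(points)
    centroid = tuple(sum(p[i] for p in points) / n for i in range(3))

    # 2. Point relocation: shift every point so the centroid sits at the origin.
    centered = [tuple(p[i] - centroid[i] for i in range(3)) for p in points]

    # 3. Scaling: divide by the largest absolute coordinate (assumed uniform scale).
    max_abs = max(abs(c) for p in centered for c in p)
    if max_abs == 0:
        # All points coincide, so there is nothing to scale.
        return centered
    return [tuple(c / max_abs for c in p) for p in centered]
```

For example, a straight stroke through `(0, 0, 0)`, `(2, 0, 0)`, and `(4, 0, 0)` has its centroid at `(2, 0, 0)`, so after normalization its endpoints land at x = -1 and x = 1. The same gesture drawn larger, smaller, or elsewhere in the room then produces the same normalized points.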

RECOGNITION DOCUMENTATION

LEVEL DESIGN

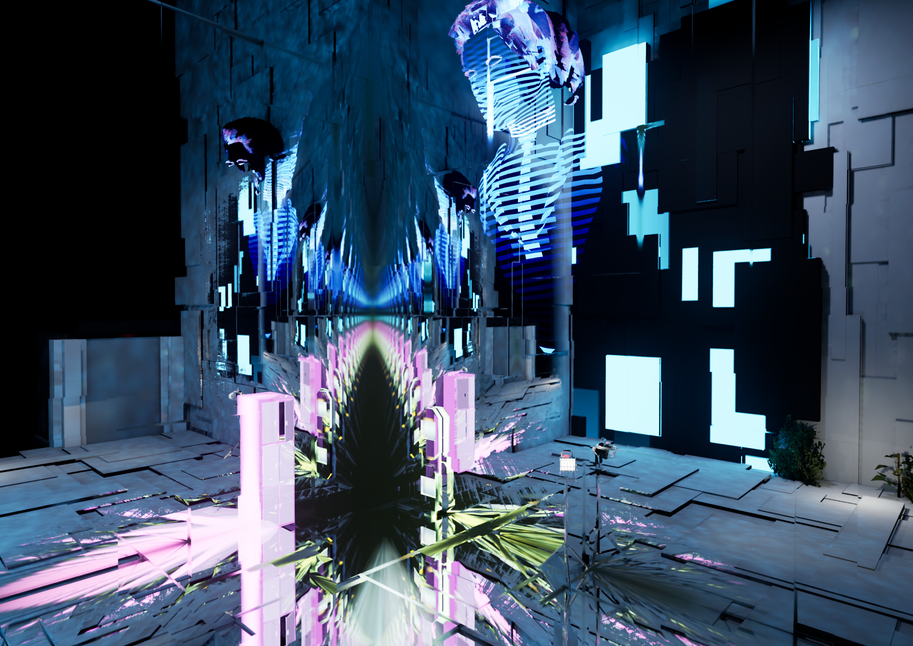

FINAL RENDERING

1st DEMO RECORDING

OTHER PROJECTS

AI OPPONENT DEVELOPMENT

COMPONENTS

The design of AI components empowers an AI-controlled robot avatar to interact with players in real time within a VR environment. Without requiring physical sensors, the AI relies solely on sensing the player’s virtual body and movements, dynamically reacting to actions through advanced perception, stimuli processing, and decision-making. This enables the avatar to respond vividly to a wide range of player behaviors and even predict their next steps, creating an immersive and lifelike interaction.
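As a rough illustration of how an AI can "sense" the player's virtual body without physical sensors, the sketch below performs a simple sight check: is the target within a given radius and inside a vision cone? It is a generic geometric test, not the project's Unreal Engine perception setup. The function name `can_see` and the parameters `sight_radius` and `half_angle_deg` are hypothetical, with arbitrary default values.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def can_see(
    observer_pos: Vec3,
    observer_forward: Vec3,
    target_pos: Vec3,
    sight_radius: float = 1500.0,   # assumed maximum sight distance
    half_angle_deg: float = 60.0,   # assumed half-width of the vision cone
) -> bool:
    """Return True if the target lies within the observer's sight radius and vision cone."""
    to_target = tuple(t - o for t, o in zip(target_pos, observer_pos))
    distance = math.sqrt(sum(c * c for c in to_target))
    if distance > sight_radius:
        return False
    if distance == 0.0:
        # Target is exactly at the observer's position.
        return True

    forward_len = math.sqrt(sum(c * c for c in observer_forward))
    if forward_len == 0.0:
        # No valid facing direction, so nothing can be seen.
        return False

    # Cosine of the angle between the facing direction and the direction to the target.
    cos_angle = sum(a * b for a, b in zip(to_target, observer_forward)) / (distance * forward_len)
    return cos_angle >= math.cos(math.radians(half_angle_deg))
```

A check like this would typically run on the positions of the player's tracked head or hands, with its result fed as a stimulus into whatever decision logic drives the avatar's reactions.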

#1 AI PERCEPTION

#2 AI STIMULI

#3 DETECTION SYSTEM

#4 DAMAGE REACTION